Trusted AI - Frameworks for Enabling Trusted AI

1 Introduction

One of the motivations behind the TrustedAI research consortium is to translate cutting-edge Artificial Intelligence (AI) and Machine Learning research into conceptual frameworks and concrete tools that facilitate the trusted adoption of AI-based technologies by the United States Department of Defense, using the United States Navy as a concrete test case. The Frameworks Project was intended to be a conduit for integrating both a set of conceptual best practices for use when developing, evaluating and deploying AI technologies, and a set of software tools that enable the conceptual frameworks. To facilitate adoption of both “frameworks”, the project looked to successful software engineering tools and principles, such as Continuous Integration/Continuous Delivery (CI/CD) tools that make wide use of software version control systems such as git as well as software repositories like GitHub. This “GitOps” approach is being increasingly adopted in private sector software projects and facilitates team-based software development, automated testing and deployment, issue tracking, collaboration and compliance. Given that AI software is currently implemented in classical “Software 1.0” written in languages like Python, C++, etc., starting with trusted engineering of the traditional software stack is foundational to the practice of TrustedAI. We also recognize that there is an “AI software” lifecycle, akin to the CI/CD lifecycle in traditional software engineering, that corresponds to the “outer ring” in Figure 1: Development of AI software, Use of that software in real-world deployments, Analysis of the AI software performance (i.e., its Trustworthiness), and Re-design or Re-training of the AI to better meet the underlying system requirements.
The GitOps approach also has the advantage of facilitating the tracking of provenance information. This matters because, at the most general level, Trustworthy AI should have the attributes of being lawful, ethical and robust throughout the AI System Lifecycle (Smuha, n.d.).

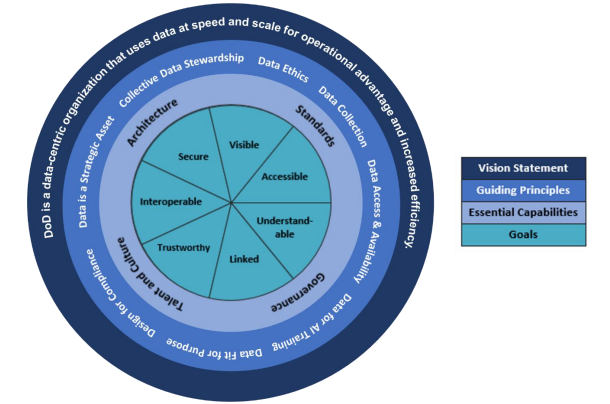

However, AI software is nevertheless fundamentally different from traditional software implementations because its behaviors are learned, not encoded by a programmer through some algorithm. This new type of software has been termed “Software 2.0” by some of the AI community. The term is based on the observation that the programming paradigm is being fundamentally altered, from implementing algorithms in the form of code to labeling, curating and engineering the data that will define software behavior and that integrates into a Chain of Trust (Toreini et al. 2020) along the ML Pipeline. This recognition of the critical nature of training data in the AI-based software development process has led to the “Data-centric” AI discipline, which aims to create principles and best practices for systematically engineering the data used to construct AI software. Data-centric AI also reflects the United States Department of Defense Data Strategy (“DoD Data Strategy: Unleashing Data to Advance the National Defense Strategy” 2020), under which data should have the goals of being Visible, Accessible, Understandable, Linked, Trustworthy, Interoperable, and Secure, as illustrated in Figure 2.

The Data-centric AI principles form the second foundational pillar for the frameworks in exploring and adopting tools that facilitate this “data and knowledge engineering process” through Data Collection, Data Preparation and Feature Extraction. We believe that Data-centric AI can benefit from knowledge engineering, where a “formal” conceptual layer that encodes a specification of the human conceptualization of the data is used to give AI deeper context about what the data means (Ilievski et al. 2021). At each stage of the ML Pipeline, each of the Six Dimensions of Trust identified by Liu et al. (2022) can be applied. In other words, each step of the ML Pipeline should be engineered with respect to Explainability, Safety & Robustness, Non-discrimination & Fairness, Accountability & Auditability, Environmental Well-being, and Privacy.
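As a minimal sketch of applying every dimension of trust at every pipeline stage, the stages and dimensions named above can be laid out as a review checklist. The function names and the idea of a boolean "reviewed" flag are assumptions made for illustration and are not part of the Frameworks tooling; only the three pipeline stages named in this section are included.

```python
from itertools import product

# Pipeline stages and trust dimensions as named in the text above.
PIPELINE_STAGES = ["Data Collection", "Data Preparation", "Feature Extraction"]
TRUST_DIMENSIONS = [
    "Explainability",
    "Safety & Robustness",
    "Non-discrimination & Fairness",
    "Accountability & Auditability",
    "Environmental Well-being",
    "Privacy",
]


def build_trust_checklist(stages, dimensions):
    """Map every (stage, dimension) pair to a 'reviewed' flag, initially False."""
    return {(stage, dim): False for stage, dim in product(stages, dimensions)}


def unreviewed(checklist):
    """Return the (stage, dimension) pairs that still need a review."""
    return [key for key, done in checklist.items() if not done]


checklist = build_trust_checklist(PIPELINE_STAGES, TRUST_DIMENSIONS)
# Hypothetical example: a privacy review of the data collection stage is complete.
checklist[("Data Collection", "Privacy")] = True
print(len(checklist), "cells,", len(unreviewed(checklist)), "still open")
```

A structure like this makes gaps visible: any stage and dimension pair that has not been examined remains in the open list.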

The goal of the Framework tools is to integrate existing software engineering tools and best practices, as well as Trusted AI Project outputs, with respect to each of these six dimensions of trust. Together they form a “Toolbox”, organized as a modular set of software frameworks, that practitioners and evaluators can use to enable each dimension at each stage of the ML Pipeline. This Framework Infrastructure connects the various components and toolboxes being developed for the Trusted AI project into a single coherent system. The system provides standardized methods for storage and version control of code, data and documentation. It defines a minimum viable vocabulary to describe experiments, as well as metadata and storage criteria for model/data outputs such as Neural Network weights. Preference is given to Open Source Software and Tools that can either be integrated into the Framework or modified by our software development teams, with possible contribution back to their parent project.
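To illustrate what a minimum viable vocabulary for describing an experiment might look like, the sketch below defines a small record that ties together code, data, parameters and outputs. Every field name and value here is hypothetical and chosen for illustration only; the actual vocabulary used by the Frameworks is not specified in this section.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentRecord:
    """Hypothetical minimal description of one experiment run."""

    name: str
    code_commit: str  # git commit of the code that was run
    data_version: str  # identifier of the dataset version used
    parameters: dict = field(default_factory=dict)
    outputs: dict = field(default_factory=dict)  # output name -> storage location

    def to_json(self) -> str:
        """Serialize with sorted keys so the file diffs cleanly under version control."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# All values below are placeholders, not results from the project.
record = ExperimentRecord(
    name="baseline-classifier",
    code_commit="0000000",
    data_version="v1",
    parameters={"learning_rate": 0.001, "epochs": 10},
    outputs={"weights": "models/baseline/weights.bin"},
)
print(record.to_json())
```

Storing such a record as a text file next to the code lets it be versioned and reviewed with the same tools as the code itself.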

2 United States Department of Defense and Trusted AI Frameworks

Part of the Trusted AI Frameworks mission is to help operationalize the DoD vision set out in “Implementing Responsible Artificial Intelligence in the Department of Defense” (Hicks 2021), which outlines the core ethical principles that govern the “…design, development, deployment, and use of AI capabilities” for the DoD. These core ethical principles demand that AI be Responsible, Equitable, Traceable, Reliable and Governable (Joint Center for Artificial Intelligence 2020). According to the guidelines, the foundational tenets for any Responsible AI (RAI) implementation include RAI Governance, Warfighter Trust, AI Product and Acquisition Lifecycle, Requirements Validation, Responsible AI Ecosystem, and AI Workforce. These RAI tenets map to elements of the Conceptual Framework Principles for TrustedAI in the AI System Lifecycle in Figure 1, particularly in establishing Warfighter Trust and informing the AI Product and Acquisition Lifecycle best practices. The RAI guidelines deeply inform both the TrustedAI project as a whole and the TrustedAI Frameworks project, particularly in building tools and educating the AI Workforce in both the core ethical principles and the RAI tenets. A Data-centric approach is critical to implementing the RAI tenets and forms the core of the Trusted AI Frameworks. The Frameworks center on the DoD Data Strategy not only as a principle for “long-term data competency grounded in high-quality training datasets” (“DoD Data Strategy: Unleashing Data to Advance the National Defense Strategy” 2020), but also as a way to structure provenance information relative to the AI lifecycle and its connection to RAI. This data strategy is represented in Figure 2 and is a core competency that the TrustedAI set of Frameworks will help to enable.

2.1 Informing DoD Acquisition of AI Technologies

Part of the motivation for the TrustedAI effort was to help inform the procurement and evaluation of AI-based technologies by the United States Navy, in concert with the RAI and other Department of Defense guidelines. As outlined in Bowne and McMartin (2022), this will likely require changes in how the DoD procures and evaluates AI technologies, as well as changes to the legal landscape in terms of licensing and contracting with AI technology suppliers. Changes in this landscape will likely require suppliers to adopt new tooling for provenance capture with respect to data and models to ensure compliance with RAI guidelines. Fortunately, as technology development moves toward the “Software 2.0” paradigm, these considerations can be built into the software development infrastructure to help ensure better adherence to the RAI tenets. The Trusted AI Frameworks aim to help inform a preliminary iteration of what these tools and best practices for technology suppliers could look like.

3 Leveraging AI Community Tools

As outlined in the Introduction, part of the goal for the Frameworks project was to identify tools that could be leveraged as part of the AI software lifecycle and that provide functionality with respect to the dimensions of trust. One attribute of trust for (open source) software tools is that there is an active community of developers maintaining the tool and providing a broader community of support for using it. After initial consultation with the stakeholders and project members, it was decided that Git would be the primary software source control tool and GitHub the initial source code repository. GitHub provides a commercial source control and collaborative development environment that is friendly to open source developers, offering free public and private repositories. Other options for git source repositories exist, such as GitLab and the self-hosted GitLab Community Edition, which provide migration paths for git-centric source code repositories if security and privacy requirements call for self-hosted options.

One way to enable Data-Centric AI (Liang et al. 2022) within the “GitOps ecosystem” is through the use of Data Version Control (DVC), which facilitates tracking of ML Models and Datasets by direct integration with the git version control system. DVC allows data to be versioned and then stored outside git repositories in a wide variety of data storage systems, facilitating the use of larger datasets and storage systems than could otherwise be accommodated by git or through the use of Git Large File Storage. DVC also has tools to help facilitate experiment reproducibility by maintaining information about the input data, configuration and code used to run an experiment, all within the git source control environment. The general methodology for the core Trusted AI Framework is that all model code, data preparation code, and training workflows are tracked within a Git Repository. Training data, generated models, and analytics are tracked by DVC, enabling a potentially separate data storage environment that uses one of the DVC-supported storage types.
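The general idea of versioning large data outside git can be sketched as follows: a small pointer file containing a content hash is committed to the repository, while the data itself lives elsewhere. This is an illustration of the concept only and is not DVC's actual file format or API; the `.pointer.json` suffix, the field names, and the choice of SHA-256 are all assumptions made for this example.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    hasher = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def write_pointer(data_path: Path) -> Path:
    """Write a small, git-friendly pointer file next to the data file."""
    pointer = {
        "path": data_path.name,
        "sha256": file_digest(data_path),
        "size": data_path.stat().st_size,
    }
    pointer_path = data_path.with_name(data_path.name + ".pointer.json")
    pointer_path.write_text(json.dumps(pointer, indent=2, sort_keys=True))
    return pointer_path


def verify_pointer(pointer_path: Path) -> bool:
    """Check that the data file still matches the hash recorded in its pointer."""
    pointer = json.loads(pointer_path.read_text())
    data_path = pointer_path.with_name(pointer["path"])
    return data_path.exists() and file_digest(data_path) == pointer["sha256"]


with tempfile.TemporaryDirectory() as tmp:
    dataset = Path(tmp) / "train.csv"  # hypothetical dataset file
    dataset.write_text("x,y\n1,2\n")
    pointer_file = write_pointer(dataset)
    ok_before = verify_pointer(pointer_file)
    dataset.write_text("x,y\n1,3\n")  # simulate the data changing after tracking
    ok_after = verify_pointer(pointer_file)

print("matches before change:", ok_before, "| matches after change:", ok_after)
```

Because only the pointer file is committed, the git history records which exact version of the data each commit used, and any later change to the data is detectable by re-hashing.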

4 TAI Projects as Framework Testbeds

Several TrustedAI projects were used as use case/test environments for the Core Framework tools during the first year of TrustedAI. These projects provided different data/machine learning model requirements to explore how effective the tools and methodology are in